In 2022, a threat actor infiltrated Uber’s internal network by exploiting the company’s Slack platform. The attacker impersonated an employee and shared an explicit image, which is believed to have helped them escalate privileges and access sensitive information. The threat actor later confessed to the attack, revealing that they used social engineering techniques to easily bypass Uber’s security measures.

The 2024 Data Breach Investigations Report published by Verizon found that email-based attacks, such as phishing and pretexting, were responsible for 73% of breaches in this sector, making them the primary cause of security incidents.

The global cybersecurity market indicator ‘Estimated Cost of Cybercrime’ was projected to increase by a total of 6.4 trillion U.S. dollars between 2024 and 2029. Social engineering has so far been the biggest cause of security breaches across sectors, and AI has made these attacks far more effective.

Social engineering is a form of cybercrime that tricks individuals into divulging sensitive information, such as passwords and credit card details, or into granting access to their computer systems so that malicious software can be installed. Rather than hacking into a system directly, social engineers exploit human error to compromise security.

It can be described as psychological manipulation. Social engineering is among the most common types of cyberattack that bad actors use against organizations, individuals, and businesses.

Some of the most common types of social engineering attacks include ransomware attacks, phishing, CEO fraud, and pretexting. These attacks are not limited to businesses and organizations; notable personalities have been targeted as well.

In January 2024, explicit AI-generated deepfake images of American musician Taylor Swift were widely disseminated on social media platforms. One post reportedly garnered over 47 million views before its removal.

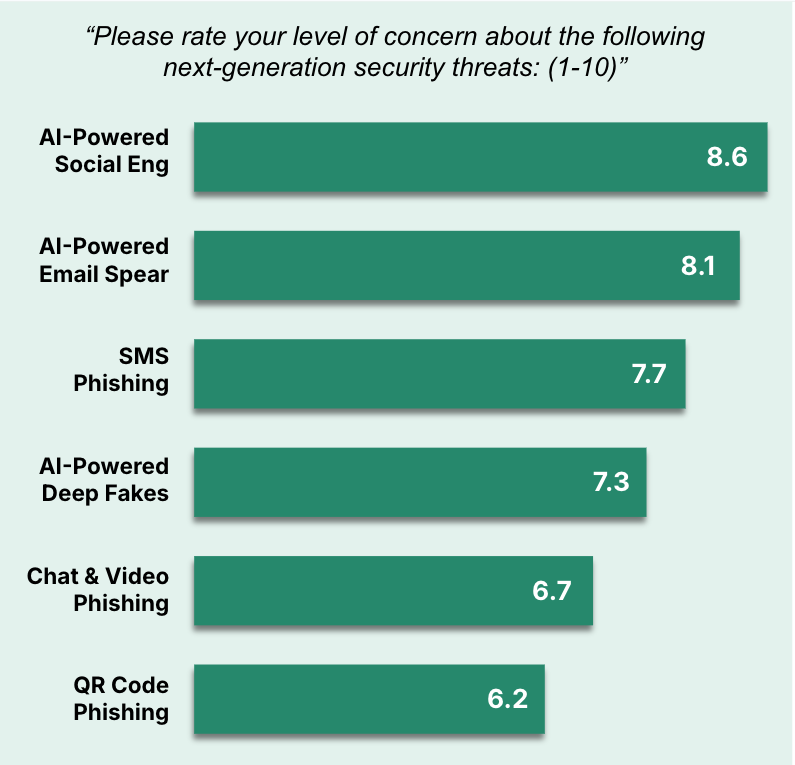

As digital footprints grow, AI-powered attacks are becoming more sophisticated, making them highly personalized, deceptive, and often irreversible.

While AI-enabled production of highly realistic deepfake videos and audio recordings tops the list of cyberattacks, phishing campaigns and voice imitation for vishing are not far behind.

Attackers can instantly generate lifelike AI personas, that is, deepfake versions of businesspeople, CEOs, or even ordinary employees of a company. These AI-driven identities can make calls, send emails, or text your team with messages that sound exactly like the real thing. Powered by open-source LLMs and trained on public data, they are fine-tuned to deceive and to slip past security defenses.

Adaptive Security, however, is convinced that generative AI can be leveraged to counter this emerging threat. With appropriate models and data, the company can simulate realistic AI attacks, training employees to identify threats, triage suspicious activity in real time, and expose risk before it results in loss.

During his tenure as CEO of Attentive, Brian Long, now CEO and co-founder of Adaptive, experienced many cyberattacks firsthand, while his co-founder Andrew Jones was alarmed by the growth in AI scams targeting high-profile executives at his previous company, TapCommerce.

Long recounted a distressing incident in which a new employee at Attentive fell victim to a scam and was tricked via text message into authorizing a substantial fraudulent business purchase by an attacker impersonating him. The situation escalated when the employee reported the incident to their manager, who was then deceived by the same attacker. Long expressed disbelief that there wasn’t a more effective way to prevent such attacks, noting that neither employee was prepared for this advanced form of cyber threat.

Therefore, in early 2024, Adaptive Security was born to significantly reduce the risk of AI-powered attacks.

As the co-founder and CEO stated, “The rise of AI-powered social engineering represents one of the most urgent cybersecurity threats of our time.”

To counter this, the company launched two products, Adaptive Phishing and Adaptive Training, which together provide a proactive approach to cybersecurity.

Adaptive Phishing uses generative AI to simulate realistic cyber attacks across email, SMS, chat, and voice. Companies can customize these scenarios with deepfake technology, open-source intelligence, and real attack tactics to test and strengthen their security defenses.

Adaptive Training equips employees with the skills to recognize and combat evolving threats like deepfakes, smishing, and social media attacks. Delivered in concise 3-7 minute modules, training is optimized for both mobile and desktop, with content personalized for each organization.

Adaptive is staying ahead with AI-driven defense. Deepfake persona simulations create hyper-realistic phishing tests across voice, SMS, and email, mimicking executives and coworkers to expose security gaps. If an employee falls for it, AI-powered training delivers instant, personalized learning, keeping engagement high with 4.8/5-rated bite-sized modules. Real-time threat triage analyzes suspicious messages instantly, giving security teams live insights, while GenAI-powered content creation helps businesses generate custom security training in seconds.

With AI-driven risk scoring, every click, call, and report feeds into a live vulnerability map—helping organizations act before attackers strike.

The company is making headlines not only for its AI-driven defense but also for its funding.

The company announced a $43 million investment round led by Andreessen Horowitz (a16z) and the OpenAI Startup Fund.

The investment marks OpenAI’s first outside backing of a cybersecurity firm, which underscores how much attention cybersecurity now demands. Executives from Google, Workday, Shopify, Plaid, and Paxos, among others, also participated in the funding round, along with Abstract Ventures, Eniac Ventures, CrossBeam Ventures, and K5. With this funding, the company plans to focus on growing its research and development team to counter the imminent threat in the cybersecurity ecosystem.

Its customers include some of the biggest names across industries, from one of the largest bank payment managers in the U.S. to major hedge funds, venture capital firms, regional and national banks, top tech companies, and critical healthcare systems. Notable clients include the Dallas Mavericks, First State Bank, and BMC, among others.
