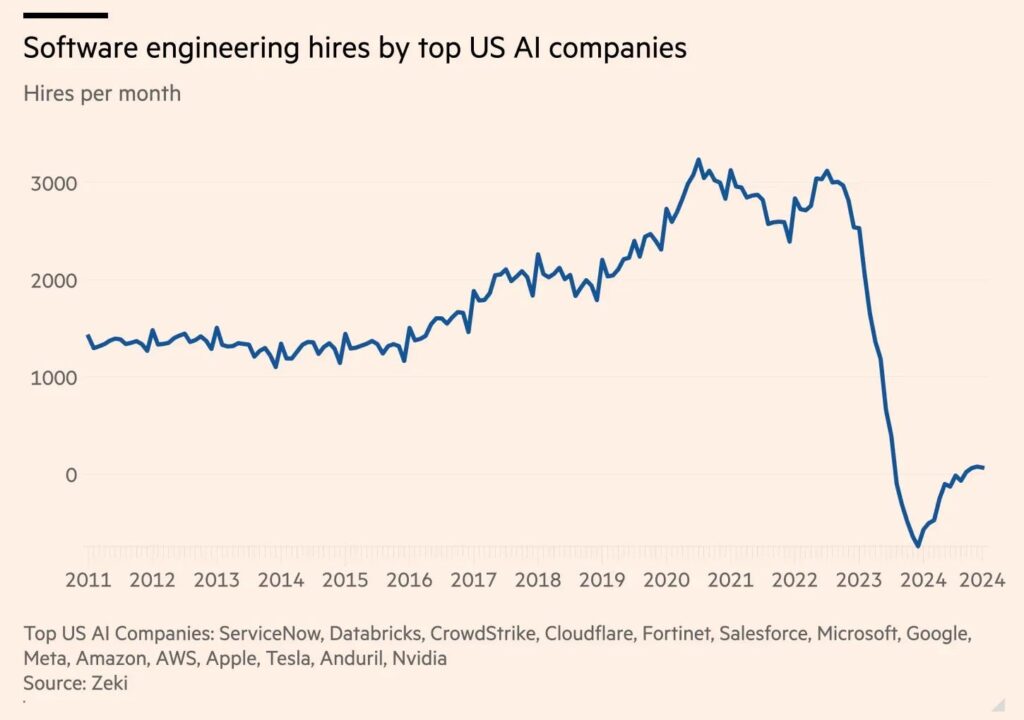

A recent graph circulating on LinkedIn shows major tech giants slashing software engineering headcount at rates that would terrify any fresh CS graduate. Fingers instantly pointed at AI automation as the culprit. But the narrative of AI devouring entry-level jobs is a convenient red herring. In reality, these companies overextended themselves in 2021–22, carrying massive R&D payrolls that never translated into proportional ROI. When macroeconomic headwinds hit, the first to go were the thousands of brand-new grads deemed “non-critical.” Blaming AI masks the real issues: reckless expansion, bloated bureaucracy, and short-sighted management.

Anyone scrolling through Twitter or LinkedIn these days will see the same refrain: AI is stealing all the junior developer jobs. Yes, AI coding tools like Cursor, GitHub Copilot, and ChatGPT have spread rapidly over the past few years, but they are far from replacing human engineers at scale. Cursor’s release was barely a year ago, hardly enough time to hollow out an entire generation of entry-level roles. Furthermore, AI tools still struggle with context, architecture, and complex debugging. They can automate boilerplate, but a junior engineer’s real value often lies in domain knowledge, teamwork, and the ability to learn and adapt, areas where AI is still years away from parity.

Yet the “AI killed my job” narrative thrives because it offers a neat storyline: shiny new technology arrives, and the old guard falls. It absolves executives of accountability, letting them wave away legitimate concerns about poor financial discipline. Citing AI as the cause of job cuts becomes a PR shield against criticism. But look beyond the headlines and you’ll find bigger culprits: aggressive hiring during the low-interest-rate era, massive R&D teams absorbing capital with no tangible output, and a sudden pivot when markets tightened in 2023–24.

Between 2020 and 2022, tech companies enjoyed historically cheap capital. Investors were eager to fund “growth at all costs,” and startups and incumbents alike hoarded talent. Headcount at FAANG and similar behemoths surged. Entire campuses were staffed with hundreds of badge-holding engineers who lacked clearly defined project roadmaps. R&D budgets ballooned, and managers hired junior engineers in droves, often without senior mentorship or clear paths to impact. The assumption was that more bodies equaled more innovation.

When the Federal Reserve began raising interest rates in 2022, it flipped the script. Suddenly, spending had to demonstrate measurable ROI. Projects that had been running on autopilot were scrutinized. Cost centers that had grown without oversight were slashed. And who bore the brunt? The new graduates: those with the least bargaining power, the least embedded in existing projects, and the least visible to senior leaders. Thousands of CS grads, hired partly so teams could claim “diversity of thought” or “future-proofing,” were shown the door the moment budgets tightened. This wasn’t a targeted AI strategy; it was a knee-jerk reaction to macroeconomic stress combined with years of profligate spending.

Look at any public filing from these tech giants, and you’ll see a massive line item for “Research & Development.” In theory, R&D drives product roadmaps and future revenue streams. But in practice, it often serves as a dumping ground for headcount. Senior executives hire teams of engineers, data scientists, and product managers with lofty promises—“We’ll pioneer the metaverse,” “We’ll build autonomous systems,” “We’ll lead in AI.” But many of these initiatives never cross the chasm from prototype to production. Instead, they generate a steady burn: fancy offices, dedicated pods, endless cloud compute bills, and no clear path to monetizable features.

This R&D black hole can be justified only when capital is virtually free. When rates rose and investors demanded accountability, these bloated labs became unsustainable. Decision-makers drew up lists of low-impact teams, often those brimming with junior engineers, and slashed them. Publicly, the blame fell on AI, but the logic was cost optimization first. These supposed centers of innovation were the first to be cannibalized, revealing that much of the “R” in R&D was lip service.

To legitimize cuts, executives frame the narrative around AI replacing human roles. Yet a closer look at public statements shows they are eliminating entire subteams, not redeploying them to AI tool development. If AI were truly the driver, we’d see those junior engineers reskilling into roles like data curation, prompt engineering, or model evaluation. Instead, headcount goes to zero and job postings vanish overnight. The simplest explanation is that these roles were never critical to begin with. AI becomes the scapegoat; senior leaders deflect blame while talking heads bang the drum of “digital transformation.”

Meanwhile, the juniors who remain on other teams face burnout and exploitation. They pick up the slack for departed colleagues, working 60-hour weeks to meet deadlines set on the assumption of twice the staff. R&D managers tout “agile teams” and “lean methodologies” to justify reduced headcount, but what’s really happening is that inexperienced engineers are forced to learn on the fly, filling in for teams laid off in the name of AI. This kills morale, slows legitimate innovation, and sets up a feedback loop: lower productivity leads to more layoffs.

Everyone praises the “lean developer” who can write production-grade code end to end. But truly “lean” teams rely on experienced senior engineers mentoring juniors. They don’t simply drop five juniors into a sprint to cover 20 senior roles. When companies proclaim they’re slimming down to pivot toward AI, they often mean “get rid of the juniors so we can bluff profitability.” Senior engineers remain insulated by specialized skill sets, often in core AI or cloud infrastructure, that justify their higher compensation. Juniors, whose salaries are a fraction of a senior’s but whose output is still ramping up, become easy collateral damage.

This “leanness” is often a guise for cost-cutting. By firing novices, companies shed lower-cost employees who don’t yet generate commensurate output. They then claim, “We’re a flatter, more efficient organization now.” But efficiency doesn’t translate to quality. Overstretched teams skip peer review and rush code, and technical debt piles up. In a few quarters, these same companies will have to hire again or bring back experienced contractors at premium rates to clean up the mess: a lose-lose for everyone except the CFO who balanced last quarter’s budget.

Curiously, most layoffs target large incumbents. Many early-stage startups are still hiring, albeit selectively. They can’t afford to carry non-essential roles, so they focus on “full-stack engineers” who write code, design UX, and own deployment. These roles are anything but junior; they demand 3–5 years of experience, sometimes even in operations or product. The result? A cold shoulder for brand-new grads. They do not meet the “impact threshold” startups demand because they lack depth. Even in a booming startup ecosystem, many founders choose to build around senior generalists rather than cultivate fresh talent through apprenticeships.

Startups prefer to hire for specialized skills: cloud security, MLOps, or fast-moving front-end frameworks. They don’t have the luxury of training juniors. This echoes a broader trend: if you’re not “immediately deployable,” you’re not hireable. This system starves the talent pipeline. Meritocracy dies as the barrier to entry creeps up: new grads are pushed into unpaid internships, bootcamps, or volunteer open-source work to pad their resumes. This perpetuates inequality; if you can’t afford to intern for free, you can’t break in.

AI gets the clickbait headlines, but the downturn began when the Fed started hiking rates in 2022. Higher interest rates make capital expensive, forcing startups and large companies to limit burn and focus on profitability. When investors demand positive EBITDA, they scrutinize every line item, and R&D becomes a prime target. That’s true in both private and public markets. If a startup can’t articulate a path to profit, funding falls through. If a public company can’t show imminent revenue from its R&D pipeline, analysts downgrade the stock.

In that context, layoffs are inevitable. It’s not AI that’s causing them; it’s a macroeconomic squeeze. Big Tech is simply executing the playbook it preaches to founders: “Show us the money.” But when the roles cut are entry-level and early-career positions, the labels on the layoffs matter. “Pivoting to AI” sounds visionary, whereas “cost-cutting” sounds desperate. Headlines are more appealing when you promise a future of autonomous bots instead of admitting you mismanaged your budgets for two years.

For every news story proclaiming AI’s triumph, there are countless disillusioned CS grads wondering if they’ll ever land that first break. University placement cells that once brokered internships and entry-level positions now have little to pass along but rejections. Students who spent four years learning data structures and algorithms are told, “You’ll figure it out.” But there is no figuring it out if every company demands three years of production experience or proof of “AI project deployments.” The harsh truth: these layoffs are not leveling up the workforce; they are creating a generation of underemployed talent.

The psychological toll is enormous. New grads comb job boards, hop on Reddit threads, and see peers who landed coveted roles before the slump. Meanwhile, they’re asked to complete take-home assignments pro bono. They spend nights building side projects, only to receive “Thanks, but no thanks” emails. The scarcity mindset drives them to accept exploitative internships or low-paying freelance gigs, perpetuating a cycle of undervaluation. Many will abandon software development entirely for consulting or non-tech roles, an immense loss for innovation in the long run.

It’s time to call out the hollow narrative around AI-driven layoffs. Senior executives should face scrutiny for the hiring sprees they greenlit during the easy-money era. Boards must question CEOs who herald upskilling programs as a solution while quietly letting entire junior cohorts go. Venture capitalists need to own their role in fueling unsustainable growth—encouraging companies to chase top-line metrics at the expense of long-term stability.

Universities and coding bootcamps could also do more: not just churn out graduates, but partner with companies to create realistic apprenticeships. Instead of feigning shock at AI’s impact, curriculum designers should teach students about software economics, budgeting, and the life cycle of startup funding. A computer science degree should not be a free ticket onto a sinking ship; it should mean being equipped to navigate a turbulent landscape.

AI undoubtedly reshapes industries, but it is not the sole executioner of entry-level software roles. The real culprits are reckless expansion, gargantuan R&D budgets with minimal ROI, and macroeconomic retrenchment. Blaming AI for these layoffs is a comfortable narrative for leaders who can’t—or won’t—admit to mismanagement. As long as we allow AI to serve as a convenient scapegoat, we ignore the systemic failures leaving a generation of new CS grads stranded. The tech industry must confront its own flaws—costly miscalculations, overhiring during frothy markets, and a rampant “growth at all costs” mentality—before it can credibly claim that AI is the enemy. Until then, expect more graphs to terrify grads and more hollow excuses disguised as visionary strategy.
