AI hardware is at an inflection point, and Andrew Feldman sees the shift with absolute clarity. The founder and CEO of Cerebras Systems has long argued that AI’s demand for compute power cannot be met by conventional GPU-based architectures. As models grow larger and more complex, the industry must move beyond the constraints of traditional chips. His answer? Wafer-scale computing.

GPUs Are Not Built for AI

For years, Nvidia has dominated AI hardware with its GPU-based ecosystem, which thrives on parallel computing. But GPUs were never built for AI; they were adapted for it. The more AI scales, the clearer the inefficiencies become. Moving data on and off the chip is one of the most power-intensive operations in AI processing, and it creates bottlenecks that hamper both efficiency and performance.

The standard workaround has been to use clusters of GPUs, connected through high-speed interconnects, to distribute the workload. But this approach is increasingly hitting its limits. Interconnect overhead increases latency, consumes excessive power, and forces engineers into a constant game of optimization to compensate for hardware inefficiencies. Even with advancements like Nvidia’s NVLink and other interconnect solutions, the underlying issue remains: distributing a workload across multiple discrete chips introduces unavoidable inefficiencies that scale poorly as models grow larger.

“In interactive mode, milliseconds matter. What’s held true for Google for years is that you can destroy user attention with milliseconds of delay,” Feldman points out. “In some cases, being the fastest doesn’t matter—we’ll call those batch jobs where maybe the cheapest solution matters. But in other domains, there is no product if you have to wait eight minutes for an answer.”

Why Wafer-Scale Computing Is the Future

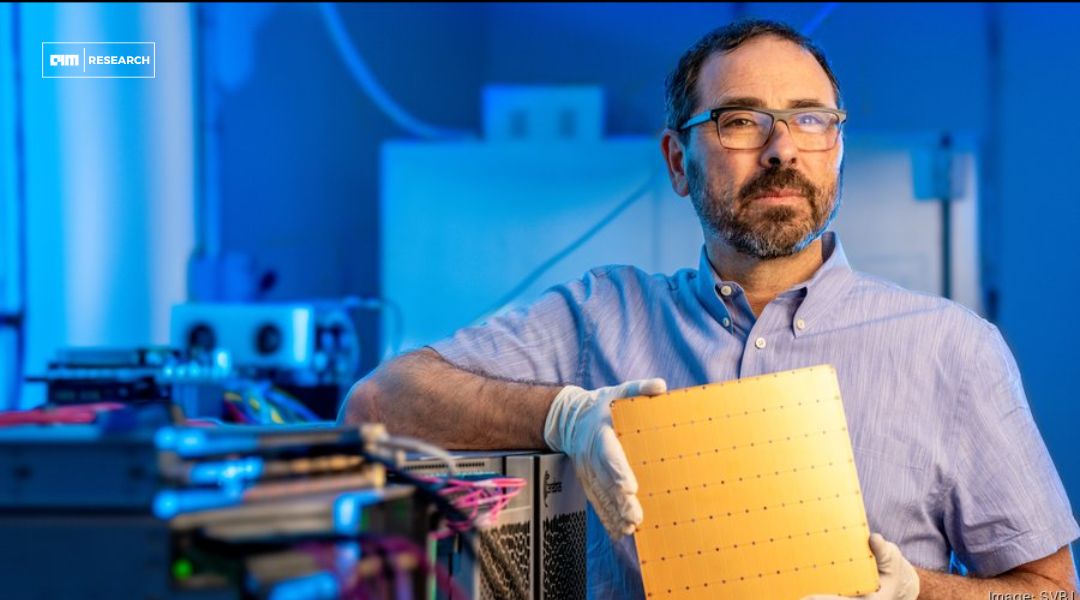

Feldman’s argument is simple: wafer-scale computing sidesteps the limitations of traditional chips. By using an entire silicon wafer as a single processor, Cerebras eliminates the need for interconnects between smaller chips, reducing latency and increasing efficiency. It also keeps data within a unified silicon domain, significantly cutting down energy consumption.

One of the biggest concerns with wafer-scale computing has always been yield. In traditional chip manufacturing, wafers are cut into smaller chips because large chips have a higher probability of defects. If a defect is present, the entire chip is often discarded or sold as a lower-tier product in a process called binning. Feldman and his team tackled this issue by rethinking the architecture entirely.

“The way your mother might take a cookie cutter and cut out cookie dough—that’s how chips are usually made,” Feldman explains. “But there are always naturally occurring flaws. The bigger the chip, the higher the probability you hit a flaw.”
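Feldman's point about chip size and flaws can be put in rough numbers with the classic Poisson yield model, in which the chance of a defect-free die falls off exponentially with its area. The sketch below is illustrative only: the defect density and the die areas are assumed values, not figures from Cerebras or any foundry.

```python
import math


def poisson_yield(area_cm2: float, defects_per_cm2: float) -> float:
    """Probability that a die of the given area has zero defects (Poisson model)."""
    return math.exp(-area_cm2 * defects_per_cm2)


# Assumed defect density, chosen only for illustration.
DEFECT_DENSITY = 0.1  # defects per square centimeter

# Assumed areas: a small chip, a large GPU-class die, and a near wafer-sized die.
for area in (1.0, 8.0, 460.0):
    y = poisson_yield(area, DEFECT_DENSITY)
    print(f"{area:6.1f} cm^2 -> chance of a flawless die: {y:.3g}")
```

Under these assumptions a flawless wafer-sized die is effectively impossible, which is why a wafer-scale design has to tolerate defects rather than avoid them.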

Cerebras tackled the challenges of wafer-scale computing by designing a processor with hundreds of thousands of identical tiles. If a tile is defective, the system seamlessly deactivates it and reroutes computation, much like redundancy mechanisms in memory manufacturing. This innovative approach ensures high yields despite the wafer-scale design.
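The idea of deactivating bad tiles and routing around them can be sketched in a few lines. This is a deliberately simplified, one-dimensional illustration, not Cerebras' actual mechanism: the function name, tile counts, and defect positions are all hypothetical, and a real design would have to remap tiles on a two-dimensional fabric.

```python
from typing import Dict, Optional, Set


def build_tile_map(
    num_physical: int, num_logical: int, defective: Set[int]
) -> Optional[Dict[int, int]]:
    """Map each logical tile to a working physical tile, skipping defective ones.

    Returns None when there are not enough working tiles to cover the
    logical tile count (that is, the spares have run out).
    """
    working = [t for t in range(num_physical) if t not in defective]
    if len(working) < num_logical:
        return None
    return {logical: physical for logical, physical in enumerate(working[:num_logical])}


# Hypothetical wafer: 10 physical tiles, 8 exposed to software, 2 spares.
NUM_PHYSICAL = 10
NUM_LOGICAL = 8
defective_tiles = {3, 7}  # assumed defect positions found at test time

tile_map = build_tile_map(NUM_PHYSICAL, NUM_LOGICAL, defective_tiles)
print(tile_map)
```

Software sees a clean run of logical tiles, while the map quietly steers work away from the flawed ones. The wafer only becomes unusable once defects outnumber the spares.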

The motivation behind Cerebras’ wafer-scale architecture is straightforward: AI inference is fundamentally a memory bandwidth problem. Traditional GPUs are constrained by their ability to move data quickly enough, whereas Cerebras’ design maximizes on-chip memory and bandwidth. With 7,000 times more memory bandwidth than a GPU, Cerebras delivers up to 70 times the inference performance. The result? Industry-leading speeds for AI applications—powering the fastest chat responses for Mistral AI, the fastest search for Perplexity, and the most efficient reasoning with DeepSeek AI R1.
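A common back-of-the-envelope estimate shows why inference is treated as a bandwidth problem: when generating one token at a time, a dense model has to read all of its weights from memory for every token, so memory bandwidth caps the token rate. The sketch below uses assumed numbers for model size, precision, and bandwidth. They are not measurements of any GPU or Cerebras system, and the estimate ignores batching, caching, and compute limits.

```python
def max_tokens_per_second(
    params_billion: float, bytes_per_param: float, bandwidth_gb_s: float
) -> float:
    """Upper bound on single-stream decode speed when weight reads dominate.

    Each generated token requires reading every weight once, so the bound is
    memory bandwidth divided by model size in bytes.
    """
    model_size_gb = params_billion * bytes_per_param  # 1e9 params * bytes = GB
    return bandwidth_gb_s / model_size_gb


# Assumed example: an 8-billion-parameter model stored at 2 bytes per weight,
# served from memory with a hypothetical 3,000 GB/s of bandwidth.
baseline = max_tokens_per_second(params_billion=8, bytes_per_param=2, bandwidth_gb_s=3000)
print(f"bandwidth-bound ceiling: {baseline:.1f} tokens/s")

# The ceiling scales linearly with bandwidth, which is the lever the article describes.
print(f"with 10x bandwidth:      {10 * baseline:.1f} tokens/s")
```

In practice other limits kick in well before bandwidth scales the ceiling indefinitely, which is consistent with a very large bandwidth advantage translating into a smaller, though still large, performance gain.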

The advantage of this approach is not just theoretical. In real-world AI workloads, moving data efficiently is often more critical than raw compute power alone. A wafer-scale chip reduces memory bottlenecks, allowing AI models to operate with dramatically lower power consumption and higher throughput compared to traditional GPU-based clusters.

The Myth of Nvidia’s CUDA Lock-In

One of the biggest misconceptions in AI hardware is the idea that Nvidia’s dominance is unshakable because of CUDA, its proprietary software ecosystem. Feldman dismisses this outright, at least in the realm of inference.

“In inference, the CUDA lock-in argument is not real,” he states bluntly. “You can move from OpenAI on an Nvidia GPU to Cerebras to Fireworks on something else to Together to Perplexity with 10 keystrokes.”
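What "10 keystrokes" might look like in practice: if inference providers expose a similar chat-style HTTP interface, switching comes down to changing an endpoint and a model name while the application code stays the same. The sketch below assumes such a shared request shape. The provider URLs and model names are hypothetical placeholders, and nothing here calls a real service.

```python
import json
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Provider:
    base_url: str  # hypothetical placeholder endpoint
    model: str     # hypothetical placeholder model name


def build_chat_request(provider: Provider, prompt: str) -> Tuple[str, str]:
    """Return the (url, json_body) pair for a chat-completions style request."""
    url = provider.base_url.rstrip("/") + "/chat/completions"
    body = json.dumps(
        {
            "model": provider.model,
            "messages": [{"role": "user", "content": prompt}],
        }
    )
    return url, body


# Two made-up providers; only these two lines differ between them.
PROVIDER_A = Provider(base_url="https://provider-a.example/v1", model="model-a")
PROVIDER_B = Provider(base_url="https://provider-b.example/v1", model="model-b")

for provider in (PROVIDER_A, PROVIDER_B):
    url, body = build_chat_request(provider, "Summarize wafer-scale computing.")
    print(url)
    print(body)
```

Nothing in the request refers to the hardware underneath, which is the crux of Feldman's argument: at inference time the customer talks to an API, not to CUDA.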

CUDA’s real advantage has historically been in training workloads, where its ecosystem is deeply entrenched. But in inference, where cost, power efficiency, and latency matter most, Nvidia’s dominance is far less assured. “The cost of inference has multiple components: power, space, and compute. Right now, GPUs doing inference are 95% wasted. Over time, we’ll get better at this, improving utilization and efficiency.”

Scaling Laws and the Role of Test-Time Compute

As AI models grow, so too does the cost of training them. But training is only part of the equation—test-time compute (inference) is where efficiency matters most. Feldman argues that AI’s future hinges on improving efficiency at inference rather than just focusing on raw training power.

“The industry talks a lot about training compute, but inference is where the real challenge lies,” he says. “Inference, especially for generative AI, has some of the most demanding requirements in terms of communication and latency.”

AI’s progress follows scaling laws: model quality improves predictably with scale, but each further gain requires a disproportionately larger increase in compute. Traditional hardware is already struggling to keep up. Wafer-scale computing, by removing interconnect constraints, provides a more viable long-term solution. The scale of this problem is evident in today’s massive language models, where inference latency and power consumption are becoming the dominant costs.

Can Wafer-Scale Chips Disrupt Nvidia?

Nvidia is not going anywhere, but its dominance is no longer a certainty. Wafer-scale computing is emerging as a legitimate competitor, particularly for AI inference. Some analysts argue that trillion-parameter models, which would be prohibitively expensive on traditional chips, will become feasible with wafer-scale architectures.

Feldman is betting on it. “The market is growing, and we’ll have a piece,” he says. “Some very big companies will be built in this 100x growth.”

His confidence is not based on speculation but on tangible engineering advancements. Since launching its inference engine in August, Cerebras has consistently demonstrated superior performance across multiple AI models. The company has already inked deals with major AI labs and cloud providers, proving its approach is not just viable but commercially competitive.

Traditional GPUs, once the cornerstone of AI computing, are now showing their limitations. Feldman’s takeaway is clear: when you make as many decisions as leaders in AI hardware do, being wrong is inevitable. Reflecting on past decisions, he acknowledges one of his biggest mistakes: resisting water cooling when his co-founder, JP, first proposed it in 2016. At the time, no one else in the industry was doing it, and Feldman fought against the idea. But JP was right. Just a few years later, Google adopted water-cooled TPUs, and now even Nvidia sells only water-cooled AI parts.

The key is not avoiding mistakes, but recognizing them early and adapting. AI’s relentless pace of innovation punishes rigidity. Success in wafer-scale computing, or in any transformative technology, demands not just vision and experience, but also the humility to acknowledge when the best path forward wasn’t the one you initially chose.