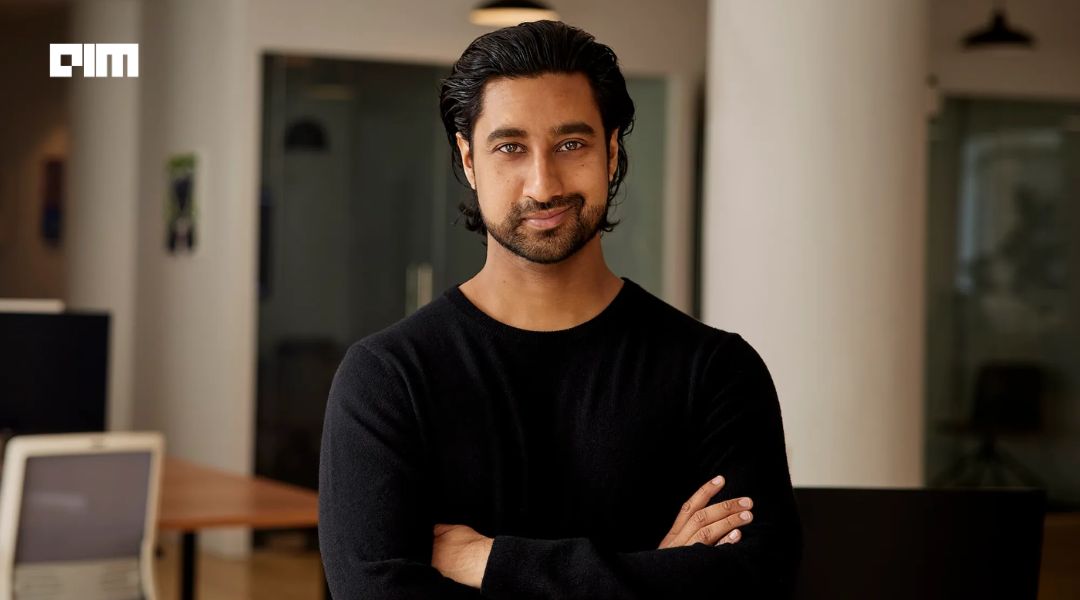

When Liran Hason co-founded Aporia in 2019, he set out with a clear mission: to provide businesses with reliable, secure, and trustworthy AI solutions. As a machine learning architect at Adallom (acquired by Microsoft) and later an investor at Vertex Ventures, Hason saw firsthand the risks associated with machine learning (ML) models in production environments. These experiences inspired the creation of Aporia, a full-stack AI observability platform designed to monitor, explain, and improve machine learning models, supporting the safe and responsible use of AI.

Aporia’s mission centers on empowering organizations to trust their AI by offering a comprehensive observability solution that tackles the challenges of bias, performance degradation, and data drift. The platform’s features, such as real-time dashboards, customizable monitors, and explainability tools, are aimed at data science teams working across industries. Companies gain clear visibility into their AI models, which helps keep their decisions transparent and accountable.

Key Products and Services

Aporia’s product suite addresses some of the most critical challenges faced by companies deploying AI solutions. The platform includes several core components:

- Model Monitoring: Aporia detects issues such as data drift, model bias, and performance problems in production models. With over 50 customizable monitors, it allows businesses to keep their models running optimally.

- Explainable AI: One of Aporia’s standout features is its explainability tools, which provide detailed insights into why a model makes certain decisions. This transparency helps businesses build trust with stakeholders and regulators, ensuring AI systems operate fairly.

- Guardrails for Multimodal AI: Aporia’s Guardrails technology is pioneering in the industry, particularly for video and audio-based AI applications. It tackles critical risks such as hallucinations, compliance violations, and off-topic responses, ensuring safe and responsible AI deployment.

These solutions have gained the trust of several high-profile clients, including Bosch, Lemonade, Levi’s, Munich RE, and Sixt. Aporia’s platform is used in various applications such as fraud detection, churn prediction, credit risk assessment, and demand forecasting. As AI becomes more pervasive, Aporia’s role in ensuring the integrity of machine learning models continues to expand.

Growth Journey

Since its founding, Aporia has seen remarkable growth. In April 2021, the company raised $5 million in seed funding, followed by a $25 million Series A round in February 2022, led by Tiger Global. With a total of $30 million raised to date, Aporia has scaled rapidly, experiencing a 600% increase in customers between 2021 and 2022. This growth has been fueled by the company’s ability to adapt to the diverse needs of industries such as finance, e-commerce, and healthcare.

Aporia has also earned recognition from industry leaders. The company was named a “Next Billion-Dollar Company” by Forbes and selected as a “Technology Pioneer” by the World Economic Forum in 2023. These accolades reflect Aporia’s status as a leader in the AI observability and responsible AI space.

Addressing Emerging AI Risks

A key differentiator for Aporia is its focus on mitigating the risks associated with AI adoption. The company has been at the forefront of addressing issues like AI hallucinations and prompt injection attacks, which can jeopardize the reliability of AI systems. Aporia’s Guardrails technology offers real-time oversight and monitoring of AI models, helping ensure that outputs are accurate and free from bias. This is particularly important in generative AI applications, where models like OpenAI’s GPT-4 can sometimes produce unreliable or nonsensical output.

Hason has coined the term “GenAI Chasm” to describe the challenges businesses face when moving AI projects from pilot phases to full-scale production. By providing robust security layers and customizable AI Guardrail policies, Aporia helps enterprises bridge this gap, ensuring that their AI deployments are both secure and scalable.

“Aporia, we are the practical guardrail,” says Hason. He emphasizes that AI has the potential to be one of the greatest technological advancements but warns that its risks should not be underestimated. “I do think AI could be the best thing that happened to humanity, really. I think it could save tons of lives, it could make our lives so much better. On the other hand, I agree AI could be the worst thing to ever happen to humanity as well.”

Future Goals and Vision

Looking ahead, Aporia has ambitious plans for expansion. The company aims to nearly triple its team size, growing from 21 to 60 employees, and increase its presence in the U.S. market. Aporia is also focused on enhancing its platform to support a wider range of AI models, including computer vision and natural language processing (NLP) applications. The company continues to develop advanced bias detection tools and expand its integration capabilities, making its platform more versatile.

Aporia’s leadership remains committed to promoting responsible AI. By developing advanced guardrails and monitoring systems, the company is helping organizations navigate the evolving landscape of AI regulations and ethical considerations. As businesses continue to adopt AI at scale, Aporia’s role as a practical, secure solution for AI observability will only grow in importance.

As Liran Hason notes, “Once you have regulation in place, companies will need to have the right tooling and the right ways to actually implement these policies. Aporia, we are the practical guardrail.”

As AI continues to transform industries, Aporia’s comprehensive, flexible approach to AI observability will be instrumental in helping companies scale their AI initiatives from pilots to full-scale deployment.

In Liran Hason’s own words: “I think AI could be the best thing that happened to humanity. It could save tons of lives, make our lives better. But on the other hand, it could be the worst thing to ever happen to humanity as well. That’s why companies like Aporia need to step up, providing the right guardrails to ensure AI’s positive impact.”